Finding Winning Stocks with Web Scrapers and Serverless (AWS Lambda and DynamoDB)

A few friends and I chat about stocks, share ideas, and encourage each other. A few months ago, I realized we needed some automation to help us find winners. I chose to use a serverless solution to build this system.

There are many good stocks, and finding them takes time. We can find them by reading articles, using stock tools, getting tips from Twitter, and in many other ways. With so many ways to find stock candidates, we needed to define a process.

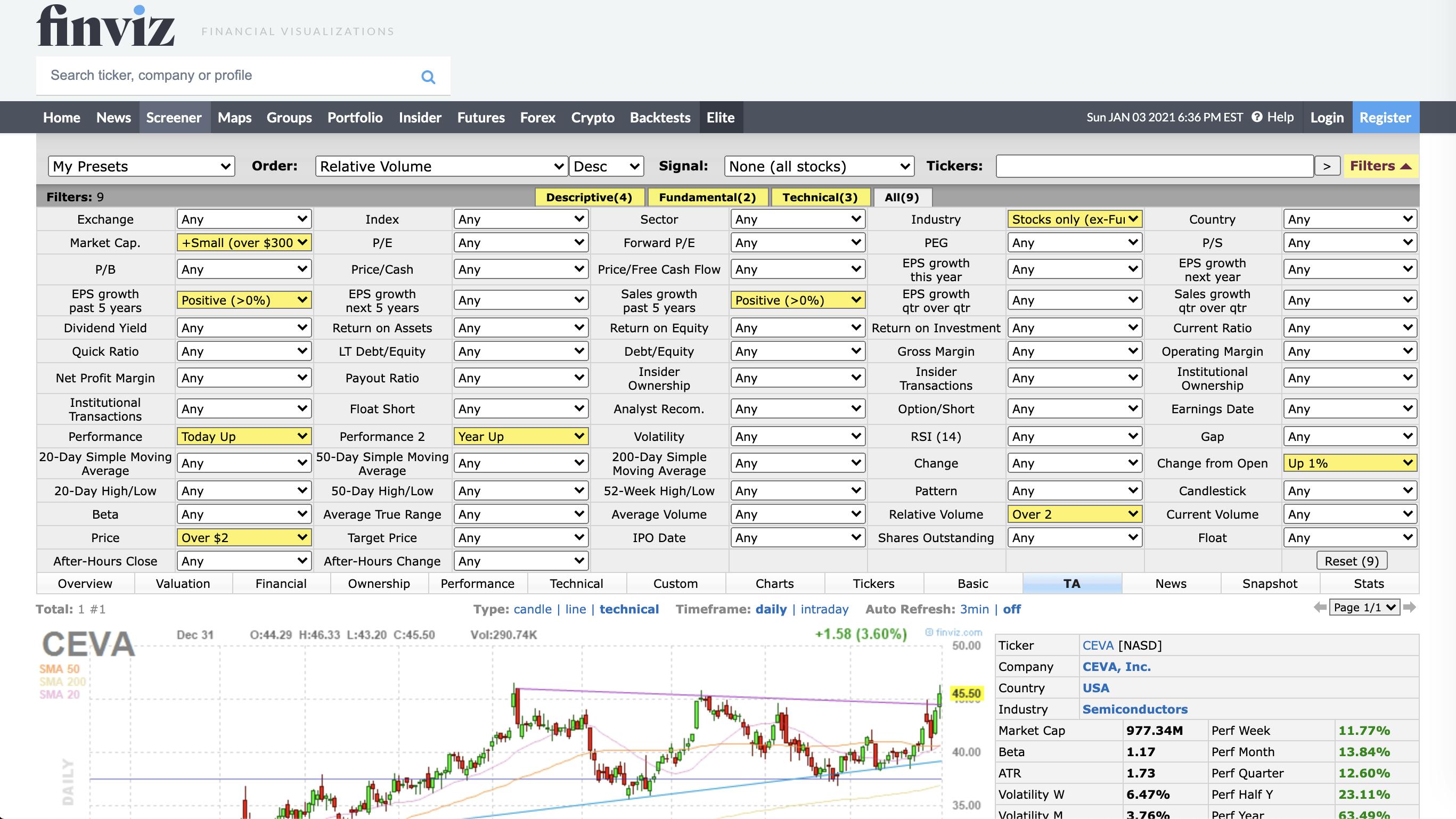

Finding a Screener

We decided FinViz.com was a good source to start our automation. One of our team members is a good stock analyst. He created a screener that we used for a long while.

Example FinViz Screener

Creating a Web Scraper

After a while, we noticed we were forgetting to check this screener. That is when automation came in handy. I wrote a Python web scraper using BeautifulSoup to get the top 10 stock symbols from the screener.

    import os

    import requests
    from bs4 import BeautifulSoup

    FINVIZ_BASE = "https://finviz.com"
    # Screener path and query string, read from the environment
    FINVIZ_PATH = os.environ.get('FINVIZ_PATH')
    FINVIZ_DATA = {}
    FINVIZ_HEADERS = {
        'User-Agent': 'My Trading App/0.0.1'
    }

    response = requests.get(
        f'{FINVIZ_BASE}{FINVIZ_PATH}',
        headers=FINVIZ_HEADERS,
        data=FINVIZ_DATA
    )
    soup = BeautifulSoup(response.text.encode('utf8'), 'html.parser')

    # Look for symbols
    symbols = []
    for link in soup.find_all('a'):
        # Assumes this HTML:
        # <a class="tab-link" href="quote.ashx?t=TWTR&ty=c&p=d&b=1">TWTR</a>
        href = link.get('href') or ''  # some anchors have no href
        if href.startswith('quote.ashx?t='):
            symbol = link.string
            if symbol:
                symbols.append(symbol)
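The loop above collects every matching link on the page. Below is a minimal sketch of how that list might be trimmed to the top 10 unique symbols. It swaps BeautifulSoup for the standard library's `html.parser` so it runs with no dependencies. `SymbolParser`, `extract_symbols`, and the `limit` parameter are hypothetical names, not part of the original scraper.

```python
from html.parser import HTMLParser


class SymbolParser(HTMLParser):
    """Collects the text of <a> tags whose href starts with 'quote.ashx?t='."""

    def __init__(self):
        super().__init__()
        self.symbols = []
        self._in_quote_link = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href") or ""
            self._in_quote_link = href.startswith("quote.ashx?t=")

    def handle_endtag(self, tag):
        if tag == "a":
            self._in_quote_link = False

    def handle_data(self, data):
        text = data.strip()
        # Keep first-seen order and skip repeats of the same symbol.
        if self._in_quote_link and text and text not in self.symbols:
            self.symbols.append(text)


def extract_symbols(html, limit=10):
    """Return up to `limit` unique symbols in page order."""
    parser = SymbolParser()
    parser.feed(html)
    return parser.symbols[:limit]


# Sample markup modeled on the link format assumed by the scraper.
sample = (
    '<a class="tab-link" href="quote.ashx?t=TWTR&ty=c&p=d&b=1">TWTR</a>'
    '<a href="/about">About</a>'
    '<a class="tab-link" href="quote.ashx?t=AAPL&ty=c&p=d&b=1">AAPL</a>'
    '<a class="tab-link" href="quote.ashx?t=TWTR&ty=c&p=d&b=1">TWTR</a>'
)
print(extract_symbols(sample))
```

Keeping the parsing in a function that takes an HTML string makes it easy to test against a saved copy of the page without hitting FinViz.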

I needed a way to run this web scraper on a timer. I could have set up a server to run the Python code as a cron job, but I did not want to maintain the server. I decided to use a serverless solution to reduce maintenance and keep my costs low.

I set up an AWS Lambda function with a Python runtime and deployed it using AWS CDK. I configured a CloudWatch Events rule with a cron schedule to trigger the Lambda function. Now the web scraper runs on that schedule.
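For readers new to Lambda, here is a minimal sketch of the entry point that a scheduled rule would invoke. The `handler` name and the `scrape_symbols` placeholder are assumptions for illustration; the article does not show the real handler.

```python
import json


def scrape_symbols():
    """Hypothetical stand-in for the scraper; returns fixed data so the sketch runs offline."""
    return ["TWTR", "AAPL"]


def handler(event, context):
    """Entry point that Lambda calls each time the schedule fires."""
    symbols = scrape_symbols()
    # Anything printed here ends up in the function's CloudWatch Logs.
    print(json.dumps({"trigger": event.get("detail-type"), "symbols": symbols}))
    return {"count": len(symbols), "symbols": symbols}


# Local smoke test with a trimmed-down scheduled event.
fake_event = {"source": "aws.events", "detail-type": "Scheduled Event"}
result = handler(fake_event, None)
```

Schedule expressions for these rules use six fields and are evaluated in UTC. For instance, `cron(0 14 ? * MON-FRI *)` would fire at 14:00 UTC on weekdays. That schedule is only an illustration, since the article does not state the one actually used.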

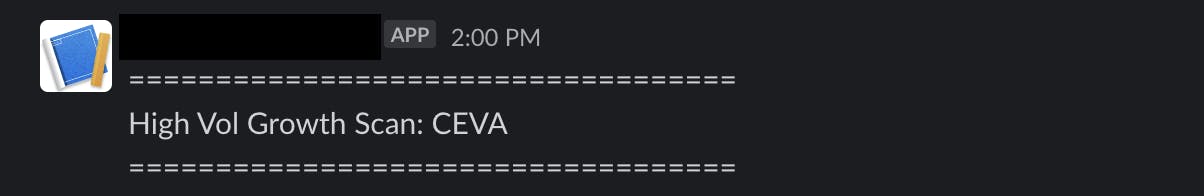

Posting to Slack

We were already using Slack to chat, so it was the ideal medium to post the top 10 symbols. I created a Slack app that posted the findings to an #alert channel and the #general channel. The #alert channel posts had detailed information (e.g., chart images). The #general channel posts had only summary information. We split them this way to avoid overwhelming the discussion in the #general channel.

Example of the #alert channel post.

Example of the #general channel post.
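As a rough sketch of the summary post, the snippet below builds a request for Slack's `chat.postMessage` Web API method using only the standard library. The article does not say how the Slack app sends its messages, so the use of this method, the helper names, the message wording, and the placeholder token are all assumptions.

```python
import json
import urllib.request

SLACK_POST_URL = "https://slack.com/api/chat.postMessage"


def build_summary(symbols):
    """One-line summary suited to a busy channel."""
    return f"Top {len(symbols)} from the screener: " + ", ".join(symbols)


def build_post_request(token, channel, text):
    """Build (but do not send) a chat.postMessage request."""
    body = json.dumps({"channel": channel, "text": text}).encode("utf-8")
    return urllib.request.Request(
        SLACK_POST_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json; charset=utf-8",
        },
    )


# Placeholder token; a real bot token would come from the Lambda environment.
request = build_post_request(
    "xoxb-placeholder", "#general", build_summary(["TWTR", "AAPL"])
)
# To actually post: urllib.request.urlopen(request)
```

A detailed #alert post could reuse `build_post_request` with a longer message body, keeping the two channels' formats separate.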

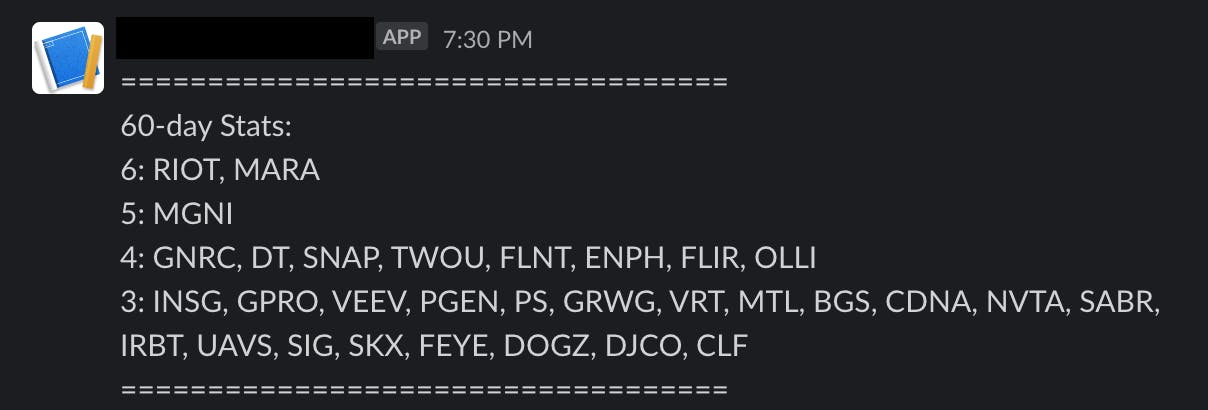

Creating stats

As you might have guessed, it became difficult to see patterns without some type of stats. We had good intel, but how could we decide which stocks to pick without something like a histogram?

We wrote each stock symbol to a DynamoDB table, along with the date it appeared in the alert.

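    import os

    import boto3

    STATS_TABLE_NAME = os.environ.get('STATS_TABLE_NAME')
    STATS_TTL_NAME = os.environ.get('STATS_TTL_NAME')
    STATS_TTL_DAYS = int(os.environ.get('STATS_TTL_DAYS'))

    client = boto3.client('dynamodb')

    # Not shown in this excerpt: sk_prefix (sort-key prefix), scan_name
    # (attribute name for the screener scan), and ttl (item expiry,
    # presumably an epoch timestamp in seconds) are set elsewhere.
    for symbol in symbols:
        client.update_item(
            TableName=STATS_TABLE_NAME,
            Key={
                'PK': {'S': symbol},
                'SK': {'S': f'#{sk_prefix}#'},
            },
            AttributeUpdates={
                # Expiry value stored in the table's TTL attribute
                STATS_TTL_NAME: {
                    'Value': {'N': f'{ttl}'},
                    'Action': 'PUT',
                },
                # Flag that the symbol appeared in this scan
                scan_name: {
                    'Value': {'BOOL': True},
                    'Action': 'PUT',
                },
            },
        )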
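The call above uses `ttl`, `sk_prefix`, and `scan_name` without showing where they come from. The sketch below shows one way the TTL could be computed and the same write expressed with `UpdateExpression`, which AWS recommends over the legacy `AttributeUpdates` parameter. The helper names and every sample value (table name, date-based sort-key prefix, attribute names) are hypothetical, and the function only builds the arguments so it runs without boto3.

```python
import time

SECONDS_PER_DAY = 24 * 60 * 60


def ttl_epoch(days, now=None):
    """Expiry time as whole epoch seconds, the format DynamoDB TTL expects."""
    now = time.time() if now is None else now
    return int(now) + days * SECONDS_PER_DAY


def build_update(table, symbol, sk_prefix, ttl_name, ttl, scan_name):
    """Keyword arguments for client.update_item(), using UpdateExpression."""
    return {
        "TableName": table,
        "Key": {"PK": {"S": symbol}, "SK": {"S": f"#{sk_prefix}#"}},
        # Placeholders (#ttl, #scan) avoid clashes with DynamoDB reserved words.
        "UpdateExpression": "SET #ttl = :ttl, #scan = :seen",
        "ExpressionAttributeNames": {"#ttl": ttl_name, "#scan": scan_name},
        "ExpressionAttributeValues": {
            ":ttl": {"N": str(ttl)},
            ":seen": {"BOOL": True},
        },
    }


# Hypothetical values: table, sort-key prefix, and attribute names are made up.
kwargs = build_update(
    table="stats",
    symbol="TWTR",
    sk_prefix="2021-01-15",
    ttl_name="expires_at",
    ttl=ttl_epoch(days=30, now=1_600_000_000),
    scan_name="top_gainers",
)
# With boto3 available: boto3.client("dynamodb").update_item(**kwargs)
```

Because the TTL is refreshed on every write, a symbol that keeps appearing stays in the table, while one that drops off the screener expires on its own.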

We then created another Lambda function to post a text-based histogram.

Example histogram post to the #general channel.
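A text-based histogram of this kind can be sketched in a few lines. The function below counts how often each symbol appeared and draws one bar per symbol. The function name, bar character, and sample data are assumptions, since the article does not show the second Lambda function's code.

```python
from collections import Counter


def text_histogram(appearances, width=20, bar="#"):
    """Render one bar per symbol, scaled so the largest count fills `width`."""
    counts = Counter(appearances)
    if not counts:
        return ""
    top = max(counts.values())
    label_width = max(len(symbol) for symbol in counts)
    lines = []
    # most_common() sorts by count, highest first.
    for symbol, count in counts.most_common():
        bar_length = max(1, round(count / top * width))
        lines.append(f"{symbol:<{label_width}} {bar * bar_length} {count}")
    return "\n".join(lines)


# Hypothetical data: one entry per day a symbol showed up in an alert.
appearances = ["TWTR", "AAPL", "TWTR", "TSLA", "TWTR", "AAPL"]
print(text_histogram(appearances, width=6))
```

When posting to Slack, wrapping the result in a code block keeps the bars aligned in a monospaced font.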

Conclusion

With a little code, we added automation to our manual investment process. We were able to extend this approach to scrape other investment sources. Furthermore, it costs $0.00 per month because it stays within the serverless allowances of the AWS free tier.

Disclaimer: This is NOT investment advice.

Photo by William Iven on Unsplash